Open Journal of Mathematical Analysis

ISSN: 2616-8111 (Online) 2616-8103 (Print)

DOI: 10.30538/psrp-oma2018.0024

Identification of a diffusion coefficient in degenerate/singular parabolic equations from final observation by hybrid method

Khalid Atifi\(^{1}\), El-Hassan Essoufi, Hamed Ould Sidi

Université Hassan Premier, Faculté des Sciences et Techniques, Département de Mathématiques et Informatique, Laboratoire MISI, Settat, Maroc. (K.A & E.E & E.O.S)

\(^{1}\)Corresponding Author; k.atifi.uhp@gmail.com

Abstract

Keywords:

1. Introduction

This article is devoted to the identification of a diffusion coefficient in a degenerate/singular parabolic equation by the variational method, from final data observation. The problem we treat can be stated as follows: consider the following degenerate parabolic equation with singular potential

The functional \(J\) is non-convex, so any descent algorithm stops at the first local minimum it meets. To stabilize this problem, the classical method is to add to \(J\) a regularizing term coming from Tikhonov regularization. We thus obtain the functional

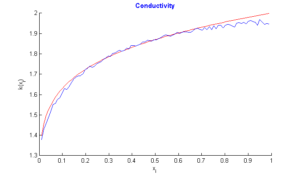

To show the difficulty of determining the parameter \(\varepsilon\) when we have only partial knowledge of \(k ^{b}\) (for example 20%) at points in space, we ran several tests for different values of \(\varepsilon\). The results are as follows:

| \(\varepsilon\) | Minimum value of \(J\) |
|---|---|
| \(1\) | \(2.230238\times 10^{-2}\) |
| \(10^{-1}\) | \(1.277236\times 10^{-2}\) |
| \(10^{-2}\) | \(1.093206\times 10^{-2}\) |
| \(10^{-3}\) | \(2.093763\times 10^{-2}\) |
| \(10^{-4}\) | \(3.029143\times 10^{-2}\) |
| \(10^{-5}\) | \(2.92163\times 10^{-3}\) |
| \(10^{-6}\) | \(1.12187\times 10^{-2}\) |

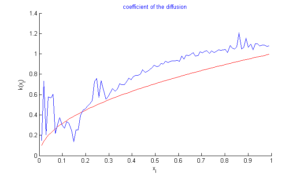

Figure 1. Graph of \(k\) in case \(\varepsilon=10^{-05}\)
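To make the role of \(\varepsilon\) concrete, the following Python sketch uses a toy diagonal problem, not the parabolic model of this paper. It assumes a Tikhonov functional of the form \(\frac{1}{2}\sum_i(\sigma_i k_i-d_i)^2+\frac{\varepsilon}{2}\sum_{i\in I}(k_i-k^b_i)^2\), where the penalty acts only at the indices \(I\) where \(k^b\) is known. The names `tikhonov_diagonal`, `sigma`, `known` and all data values are hypothetical.

```python
def tikhonov_diagonal(sigma, d, k_b, known, eps):
    """Minimize 0.5*sum((sigma_i*k_i - d_i)^2) + 0.5*eps*sum_{i in known}(k_i - k_b_i)^2.

    The toy problem is diagonal, so each component has a closed-form minimizer.
    """
    k = []
    for i, (s, di) in enumerate(zip(sigma, d)):
        if i in known:
            # Penalized component: balance between data fit and background.
            k.append((s * di + eps * k_b[i]) / (s * s + eps))
        else:
            # No background available: plain inversion, noise is amplified by 1/s.
            k.append(di / s)
    return k


# Toy data (assumed): decaying singular values, exact coefficient, small noise.
sigma = [1.0, 0.5, 0.1, 0.01, 0.001]
k_true = [1.0] * 5
noise = [1e-3, -1e-3, 1e-3, -1e-3, 1e-3]
d = [s * kt + n for s, kt, n in zip(sigma, k_true, noise)]
k_b = k_true[:]   # background, trusted only at the indices in `known`
known = {3}       # partial knowledge: one point out of five (20%)

for eps in (1.0, 1e-2, 1e-4, 1e-6):
    k = tikhonov_diagonal(sigma, d, k_b, known, eps)
    print(f"eps={eps:g}  k at known index={k[3]:.5f}  k at unknown index={k[4]:.5f}")
```

In this toy setting, the components outside `known` receive no regularization whatever the value of \(\varepsilon\), which is one way to see why tuning \(\varepsilon\) alone is delicate when \(k^b\) is only partially known.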

To overcome this problem in the case where \(k ^{b}\) is only partially known, we propose in this work a new approach based on hybrid genetic algorithms, which consists in minimizing the functional \(J\) without any regularization. This work is a continuation of [5], where the authors identify the initial state of a degenerate parabolic problem.

First, in order to show that the minimization problem and the direct problem are well-posed, we prove that the solution depends continuously on the initial conditions. Second, we show that the minimization problem has at least one minimum. Finally, the gradient of the cost function is computed using the adjoint state method. We also present some numerical experiments to show the performance of this approach.

2. Well-posedness

We now specify some notation that we shall use. Let us introduce the following functional spaces (see [6, 7, 8])

The weak formulation of the problem (1) is:

Theorem 2.1. (see [7, 10]) If \(f=0\), then for all \(u_0\in L^2(\Omega)\) the problem (1) has a unique weak solution

Theorem 2.2. (see [1])

For every \(u_0\in L^2(\Omega)\) and \(f\in L^2(Q_T),\) where \(Q_T=((0,T)\times\Omega)\) there exists a unique solution of problem (1). In particular, the operator \(\mathcal{O}: D(\mathcal{O})\longmapsto L^2(\Omega)\) is non positive and self-adjoint in \(L^2(\Omega)\) and it generates an analytic contraction semigroup of angle \(\pi/2.\) Moreover, let \(u_0\in D(\mathcal{O})\); then

\(f\in W^{1,1}(0,T;L^2(\Omega))\Rightarrow u\in C^0([0,T];D(\mathcal{O}))\cap C^1([0,T];L^2(\Omega)),\)

\(f\in L^2(Q_T)\Rightarrow u\in H^1(0,T;L^2(\Omega)).\)

Theorem 2.3. Let \(u\) be the weak solution of (1). The function

Proof of Theorem 2.3. Let \(\delta k \in H^1(\Omega)\) be a small variation such that \(k+\delta k \in A_{ad}\), and let \(u_0\in D(\mathcal{O}).\) Consider \(\delta u =u ^{\delta }-u\), where \(u\) is the weak solution of (1) with diffusion coefficient \(k\), and \(u ^{\delta }\) is the weak solution of the following problem (17) with diffusion coefficient \(k^{\delta }=k+\delta k.\)

there is \(C>0,\) such that

3. Gradient of \(J\)

We define the Gâteaux derivative of \(u\) at \(k\) in the direction \(h\in L^2( \Omega \times ] 0,T[)\) by
\begin{equation*}
\hat{u}=\lim_{s\rightarrow 0}\frac{u(k+sh)-u(k)}{s}.
\end{equation*}
For a function \(p\), integration by parts gives
\begin{align*}
\int_{0}^{T}\int_{0}^{1}\frac{\partial \hat{u}}{\partial t}p\,dxdt =\int_{0}^{1}\left [ \hat{u}p \right ]_{0}^{T}dx-\int_{0}^{1}\int_{0}^{T}\frac{\partial p}{\partial t}\hat{u}\,dtdx,
\end{align*}
\begin{align*}
\int_{0}^{T}\int_{0}^{1}\frac{\partial }{\partial x}\left (kx^{\alpha}\frac{\partial \hat{u}}{\partial x}\right) p\, dxdt & =\int_{0}^{T}\left [ \left (kx^{\alpha}\frac{\partial \hat{u}}{\partial x}\right )p \right ]_{0}^{1}dt-\int_{0}^{T}\int_{0}^{1}kx^{\alpha}\frac{\partial \hat{u}}{\partial x}\frac{\partial p}{\partial x}\, dxdt\\
& = \int_{0}^{T}\left [ \left (kx^{\alpha}\frac{\partial \hat{u}}{\partial x}\right )p \right ]_{0}^{1}dt- \int_{0}^{T}\left [kx^{\alpha}\hat{u}\frac{\partial p}{\partial x}\right ]_{0}^{1}dt+\int_{0}^{T}\int_{0}^{1}\frac{\partial}{\partial x}\left (kx^{\alpha}\frac{\partial p}{\partial x}\right )\hat{u}\,dxdt\\
& = \int_{0}^{T}\left [ \left (kx^{\alpha}\frac{\partial \hat{u}}{\partial x}\right )p \right ]_{0}^{1}dt+\int_{0}^{T}\int_{0}^{1}\frac{\partial}{\partial x}\left (kx^{\alpha}\frac{\partial p}{\partial x}\right )\hat{u}\,dxdt,
\end{align*}
\begin{align*}
\int_{0}^{T}\int_{0}^{1}p\left (\frac{\partial}{\partial x}\left (hx^{\alpha}\frac{\partial u}{\partial x}\right ) \right )dxdt & =\int_{0}^{T}\left [hx^{\alpha} \frac{\partial u}{\partial x}p \right ]_{0}^{1}dt-\int_{0}^{T}\int_{0}^{1} hx^{\alpha}\frac{\partial u}{\partial x}\frac{\partial p}{\partial x}\,dxdt.
\end{align*}
Setting \(p(x=1,t)=0,\quad p(x=0,t)=0, \quad p(x,t=T)=0,\) we obtain

4. Numerical scheme

Step 1. Full discretization

Discrete approximations of these problems are needed for the numerical implementation. To solve the direct problem and the adjoint problem, we use the \(\theta\)-scheme in time.

This method is unconditionally stable for \(1 >\theta \geq \dfrac{1}{2}.\)
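As an illustration only, the \(\theta\)-scheme can be sketched in Python for the simplified model \(u_t=(k(x)x^{\alpha}u_x)_x\) on \((0,1)\) with \(u(0,t)=u(1,t)=0\). This sketch assumes \(f=0\), omits the singular potential, and uses a conservative finite-difference discretization in space. These are assumptions of the example, not necessarily the scheme used in this paper, and the names `thomas` and `theta_scheme` are hypothetical.

```python
import math


def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system; lower[0] and upper[-1] are never read as couplings."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / m
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def theta_scheme(k, alpha, u0, T, N, M, theta=0.5):
    """Advance u_t = (k(x) x^alpha u_x)_x with homogeneous Dirichlet conditions.

    N interior nodes, M time steps; returns the interior values at time T.
    """
    h = 1.0 / (N + 1)
    dt = T / M
    r = dt / (h * h)
    # Diffusion coefficient evaluated at the half nodes x_{i+1/2}, i = 0..N.
    a = [k((i + 0.5) * h) * ((i + 0.5) * h) ** alpha for i in range(N + 1)]
    lo = [-r * a[i] for i in range(N)]            # coupling with the left neighbour
    di = [r * (a[i] + a[i + 1]) for i in range(N)]
    up = [-r * a[i + 1] for i in range(N)]        # coupling with the right neighbour
    u = [u0((i + 1) * h) for i in range(N)]
    for _ in range(M):
        rhs = []
        for i in range(N):
            Au = di[i] * u[i]
            if i > 0:
                Au += lo[i] * u[i - 1]
            if i < N - 1:
                Au += up[i] * u[i + 1]
            rhs.append(u[i] - (1.0 - theta) * Au)  # explicit part of the step
        # Implicit part: (I + theta * dt * A) u_new = rhs
        u = thomas([theta * v for v in lo],
                   [1.0 + theta * v for v in di],
                   [theta * v for v in up], rhs)
    return u


# Example: degenerate diffusion with alpha = 1/2 and k = 1 (values chosen for illustration).
u_T = theta_scheme(lambda x: 1.0, 0.5, lambda x: math.sin(math.pi * x), 0.1, 99, 100)
```

Choosing \(\theta=\frac{1}{2}\) gives the Crank-Nicolson scheme and \(\theta=1\) the implicit Euler scheme; each time step only requires one tridiagonal solve.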

Let \(h\) be the step in space and \(\Delta t\) the step in time.

Let

\begin{equation*}
x_{i}=ih, \ \ \ \ i\in \left\{ 0,1,2,\dots,N+1\right\},
\end{equation*}

\begin{equation*}
c(x_{i})=a(x_{i}),
\end{equation*}

\begin{equation*}
t_{j}=j\Delta t, \ \ \ j\in \left\{0,1,2,\dots,M+1\right\},
\end{equation*}

\begin{equation*}
f_{i}^{j}=f(x_{i},t_{j}),
\end{equation*}

we put

Step 2. Discretization of the functional \(J\)

5. Genetic algorithm and hybrid method

Genetic algorithms (GA) are adaptive search and optimization methods based on the genetic processes of biological organisms. Their principles were first laid down by Holland. The aim of a GA is to optimize a problem-defined function, called the fitness function. To do this, a GA maintains a population of individuals (suitably represented candidate solutions) and evolves this population over time. At each iteration, called a generation, a new population is created by selecting individuals according to their level of fitness in the problem domain and breeding them together using operators borrowed from natural genetics, such as crossover and mutation. As the population evolves, the individuals tend in general toward the optimal solution. The basic structure of a GA is the following:

1. Initialize a population of individuals;

2. Evaluate each individual in the population;

3. While the termination criterion is not reached, do:

    4. Select individuals for the next population;

    5. Apply genetic operators (crossover, mutation) to produce new individuals;

    6. Evaluate the new individuals;

7. Return the best individual.
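The structure above can be sketched in Python as follows. This is a minimal real-coded GA written for minimization (lower cost means fitter), with tournament selection, arithmetic crossover, Gaussian mutation and elitism. These operator choices, the function name `genetic_minimize` and all parameter values are assumptions of the example, not the settings used in this paper.

```python
import random


def genetic_minimize(cost, dim, bounds, pop_size=40, generations=200,
                     p_mut=0.1, sigma=0.1, seed=0):
    """Minimize `cost` over vectors of length `dim` with entries in `bounds`."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Steps 1-2: initialize and evaluate the population.
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    scores = [cost(ind) for ind in pop]

    def pick():
        # Binary tournament: the better of two random individuals is selected.
        i, j = rng.randrange(pop_size), rng.randrange(pop_size)
        return pop[i] if scores[i] < scores[j] else pop[j]

    # Step 3: evolve until the generation budget is exhausted.
    for _ in range(generations):
        best = min(range(pop_size), key=scores.__getitem__)
        new_pop = [pop[best][:]]                      # elitism: keep the best
        while len(new_pop) < pop_size:
            p1, p2 = pick(), pick()                   # step 4: selection
            w = rng.random()
            child = [w * a + (1.0 - w) * b for a, b in zip(p1, p2)]  # crossover
            child = [min(hi, max(lo, g + rng.gauss(0.0, sigma)))
                     if rng.random() < p_mut else g
                     for g in child]                  # step 5: mutation
            new_pop.append(child)
        pop = new_pop
        scores = [cost(ind) for ind in pop]           # step 6: evaluation
    # Step 7: return the best individual and its cost.
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]


# Example on a toy cost (sum of squares), not the functional J of this paper.
best, value = genetic_minimize(lambda v: sum(x * x for x in v), 3, (-1.0, 1.0))
```

Because the best individual is copied unchanged into each new generation, the best cost never increases from one generation to the next.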

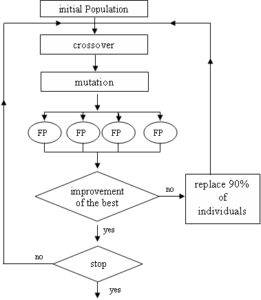

Hybrid methods combine principles from genetic algorithms and other optimization methods. In this approach, we combine the genetic algorithm with a descent method (the steepest descent algorithm (FP)) as follows:

We assume that we have partial knowledge of the background state \(k^b\) at certain points \((x_i)_{i\in I}\), \(I\subset \left\lbrace 1,2,\dots,N+1\right\rbrace\).

We assume that an individual is a vector \(k\) and that the population is a set of individuals.

The initialization of an individual is as follows

The main steps of the descent method (FP) at each iteration are:

- Calculate \(u^{j}\) solution of (1) with coefficient \(k^j\)

- Calculate \(P^{j}\) solution of adjoint problem

- Calculate the descent direction \(d_{j}=-\nabla J(k^j)\)

- Find \(\displaystyle t_{j}=\underset{t>0}{\operatorname{argmin}}\, J(k^j+td_{j})\)

- Update the variable \(k^{j+1}=k^j+t_{j}d_{j}\).

The algorithm ends when \(\displaystyle \mid J(k^j)\mid< \mu\), where \(\mu\) is a given small tolerance. The step \(t_{j}\) is chosen by an inexact line search using the Armijo-Goldstein rule as follows: let \(\alpha_{i}, \beta \in [0,1[\) and \(\alpha>0\). Since \(d_{j}=-\nabla J(k^j)\), we have \(\nabla J(k^j)^{T}d_{j}=-d^{T}_{j}d_{j}\), so the sufficient decrease test reads

if \(J(k^{j}+\alpha_i d_{j})\leqslant J(k^{j})-\beta \alpha_{i} d^{T}_{j}d_{j}\)

\(t_{j}=\alpha_i\) and stop

if not

\(\alpha_i = \alpha \alpha_i\).
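The descent loop with backtracking can be sketched in Python as follows. The sketch uses the standard sufficient-decrease inequality \(J(k+td)\leqslant J(k)+\beta t\,\nabla J(k)^{T}d\) with \(d=-\nabla J(k)\), and it is applied to a toy quadratic cost rather than to the functional \(J\) of this paper, whose gradient requires the direct and adjoint solves. The names `armijo_step` and `steepest_descent` and the default values are assumptions of the example.

```python
def armijo_step(J, k, d, t0=1.0, shrink=1.0 / 3.0, beta=0.75, max_backtracks=50):
    """Backtracking line search along d = -grad J(k).

    Accept the first t with J(k + t d) <= J(k) - beta * t * (d . d), which is
    the sufficient-decrease test because grad J(k) . d = -(d . d).
    """
    Jk = J(k)
    dd = sum(di * di for di in d)
    t = t0
    for _ in range(max_backtracks):
        trial = [ki + t * di for ki, di in zip(k, d)]
        if J(trial) <= Jk - beta * t * dd:
            break
        t *= shrink          # step rejected: reduce it and try again
    return t


def steepest_descent(J, grad, k0, mu=1e-10, max_iter=1000):
    """Iterate k <- k + t d with d = -grad J(k) until |J(k)| < mu."""
    k = list(k0)
    for _ in range(max_iter):
        if abs(J(k)) < mu:
            break
        d = [-g for g in grad(k)]
        if all(di == 0.0 for di in d):
            break            # stationary point: no descent direction left
        t = armijo_step(J, k, d)
        k = [ki + t * di for ki, di in zip(k, d)]
    return k


# Toy quadratic cost with minimizer c (illustrative data only).
c = [1.0, 2.0]
J = lambda k: 0.5 * sum((ki - ci) ** 2 for ki, ci in zip(k, c))
grad = lambda k: [ki - ci for ki, ci in zip(k, c)]
k_star = steepest_descent(J, grad, [0.0, 0.0])
```

The stopping test on \(|J(k^j)|\) is meaningful here because the minimum value of the toy cost is zero; for a cost whose minimum is not zero, a test on the gradient norm would be the usual alternative.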

Figure 2. Hybrid Algorithm

6. Numerical experiments

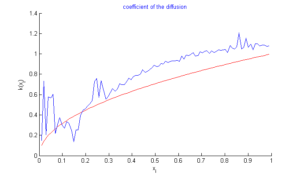

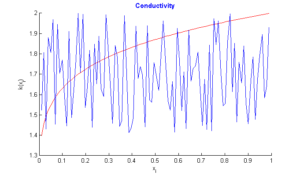

In this section, we carry out three tests. In the first test, we recall the result obtained by the simple descent algorithm with \(\varepsilon=10^{-5}\) (Figure 3). In the second test, we run only the genetic algorithm (Figure 4). Finally, in the third test, we run the hybrid approach (Figure 5) with parameters \(\alpha=1/3,\) \(\beta=3/4\), \(\lambda=-2/3\), \(N=M=99,\) number of individuals \(=40\), and number of generations \(=2000\). In the figures below, the exact function is drawn in red and the reconstructed function in blue.

Figure 3. Test with simple descent and \(\varepsilon=10^{-5}\).

Figure 4. Test with genetic algorithm.

Figure 5. Test with hybrid algorithm.

7. Conclusion

We have presented in this paper a new approach based on a hybrid genetic algorithm for the determination of a coefficient in the diffusion term of a degenerate/singular one-dimensional linear parabolic equation from final data observations. First, in order to show that the minimization problem and the direct problem are well-posed, we proved that the solution depends continuously on the initial conditions. Second, we showed that the minimization problem has at least one minimum. Finally, we proved the differentiability of the cost function, which gives the existence of the gradient of this functional; this gradient is computed using the adjoint state method. We also presented some numerical experiments to show the performance of this approach in reconstructing the diffusion coefficient of a degenerate parabolic problem.

Competing Interests

The authors declare that they have no competing interests.

References

- Vogel, C. R. (2002). Computational methods for inverse problems (Vol. 23). SIAM, Frontiers in Applied Mathematics.

- Engl, H. W., Hanke, M., & Neubauer, A. (1996). Regularization of inverse problems (Vol. 375). Springer Science & Business Media.

- Hansen, P. C. (1992). Analysis of discrete ill-posed problems by means of the L-curve. SIAM Review, 34(4), 561-580.

- Tikhonov, A. N., Goncharsky, A., Stepanov, V. V., & Yagola, A. G. (2013). Numerical methods for the solution of ill-posed problems (Vol. 328). Springer Science & Business Media.

- Atifi, K., Balouki, Y., Essoufi, E. H., & Khouiti, B. (2017). Identifying initial condition in degenerate parabolic equation with singular potential. International Journal of Differential Equations, 2017.

- Alabau-Boussouira, F., Cannarsa, P., & Fragnelli, G. (2006). Carleman estimates for degenerate parabolic operators with applications to null controllability. Journal of Evolution Equations, 6(2), 161-204.

- Hassi, E. M., Khodja, F. A., Hajjaj, A., & Maniar, L. (2013). Carleman estimates and null controllability of coupled degenerate systems. J. of Evol. Equ. and Control Theory, 2, 441-459.

- Cannarsa, P., & Fragnelli, G. (2006). Null controllability of semilinear degenerate parabolic equations in bounded domains. Electronic Journal of Differential Equations (EJDE) [electronic only], 136, 1-20.

- Emamirad, H., Goldstein, G., & Goldstein, J. (2012). Chaotic solution for the Black-Scholes equation. Proceedings of the American Mathematical Society, 140(6), 2043-2052.

- Vancostenoble, J. (2011). Improved Hardy-Poincaré inequalities and sharp Carleman estimates for degenerate/singular parabolic problems. Discrete Contin. Dyn. Syst. Ser. S, 4(3), 761-790.

- Hasanov, A., DuChateau, P., & Pektaş, B. (2006). An adjoint problem approach and coarse-fine mesh method for identification of the diffusion coefficient in a linear parabolic equation. Journal of Inverse and Ill-posed Problems, 14(5), 435-463.