Open Journal of Mathematical Analysis

ISSN: 2616-8111 (Online) 2616-8103 (Print)

DOI: 10.30538/psrp-oma2018.0026

Higher order nonlinear equation solvers and their dynamical behavior

Sabir Yasin\(^1\), Amir Naseem

Department of Mathematics, University of Lahore, Pakpattan Campus, Lahore, Pakistan. (S.Y.)

Department of Mathematics, University of Management and Technology, Lahore, Pakistan. (A.N.)

\(^{1}\)Corresponding Author; sabiryasin77@gmail.com

Abstract

Keywords:

1. Introduction

One of the most important problems is to find the values of \(x\) which satisfy the equation $$ f(x)=0. $$ The solution of this problem has many applications in the applied sciences. To solve it, various numerical methods have been developed using different techniques such as Adomian decomposition, Taylor's series, the perturbation method, quadrature formulas and variational iteration techniques; see [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22] and the references therein. One of the oldest and most famous methods for solving nonlinear equations is the classical Newton's method, which can be written as: $$x_{n+1}=x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})},\,\,\,n=0,1,2,3,...$$

Modifications of Newton's method have produced various iterative methods with better convergence order. Some of them are given in [3, 8, 9, 10, 11, 18, 19] and the references therein. In [23], Traub developed the following double Newton's method: $$y_{n}=x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})},$$ $$x_{n+1}=y_{n}-\frac{f(y_{n})}{f^{\prime }(y_{n})},\,\,\,n=0,1,2,3,...$$ This method is also known as Traub's method.
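As a minimal illustration of the two schemes just quoted, the sketch below applies Newton's method and Traub's double Newton's method to \(f(x)=x^{3}-1\). The helper names `newton_step` and `solve`, the tolerance and the starting point are illustrative assumptions, not part of the original paper.

```python
def newton_step(f, df, x):
    """One Newton step: x - f(x) / f'(x)."""
    return x - f(x) / df(x)


def solve(step, x0, tol=1e-12, max_iter=50):
    """Apply `step` repeatedly until two successive iterates differ by less than tol."""
    x = x0
    for n in range(1, max_iter + 1):
        x_new = step(x)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Illustrative test function f(x) = x^3 - 1 with the real root 1.
f = lambda x: x**3 - 1
df = lambda x: 3 * x**2

# Newton's method: one Newton step per iteration.
newton = lambda x: newton_step(f, df, x)
# Traub's double Newton's method: y_n is a Newton step from x_n,
# and x_{n+1} is a second Newton step from y_n.
traub = lambda x: newton_step(f, df, newton_step(f, df, x))

root_nm, n_nm = solve(newton, 2.3)
root_tm, n_tm = solve(traub, 2.3)
print("Newton:", root_nm, "iterations:", n_nm)
print("Traub: ", root_tm, "iterations:", n_tm)
```

Each Traub iteration costs two function and two derivative evaluations, so a lower iteration count does not by itself mean less work.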

Ostrowski's method (see [24, 25, 26]) is also a well-known iterative method, which has fourth-order convergence: $$y_{n}=x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})},$$ $$x_{n+1}=y_{n}-\frac{f(x_n)f(y_{n})}{f^\prime(x_n)[f(x_n)-2f(y_{n})]},\,\,\,n=0,1,2,3,...$$ Noor et al. [27] developed the modified Halley's method, which has fifth-order convergence: $$y_{n}=x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})},$$ $$x_{n+1}=y_{n}-\frac{f(x_n)f(y_{n})f^\prime(y_{n})}{2f(x_n)f^{\prime 2}(y_n)-f^{\prime 2}(x_n)f(y_n)+f^\prime(x_n)f^\prime(y_n)f(y_{n})},\,\,\,n=0,1,2,3,...$$ In this paper, we develop three new iterative methods using the variational iteration technique. The variational iteration technique was developed by He [14]. Using this technique, Noor and Shah [17] have suggested and analyzed some iterative methods for solving nonlinear equations. The purpose of this technique was to solve a variety of diverse problems [14, 15]. Here we apply the technique to obtain higher-order iterative methods. We also discuss the convergence criteria of these new iterative methods. Several examples are given to show the performance of our proposed methods as compared to other similar existing methods. We also compare polynomiographs of our developed methods with those of Newton's method, Ostrowski's method, Traub's method and the modified Halley's method.
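For reference, Ostrowski's two-step scheme can be sketched as follows, using its standard form \(x_{n+1}=y_{n}-\frac{f(y_{n})}{f^{\prime}(x_{n})}\cdot\frac{f(x_{n})}{f(x_{n})-2f(y_{n})}\). The function name `ostrowski`, the tolerance, the early exit at an exact root and the test function are assumptions made for illustration.

```python
def ostrowski(f, df, x0, tol=1e-12, max_iter=50):
    """Ostrowski's fourth-order method (standard two-step form)."""
    x = x0
    for n in range(1, max_iter + 1):
        fx = f(x)
        if fx == 0:
            # Already at a root; this also avoids a 0/0 in the corrector.
            return x, n - 1
        dfx = df(x)
        y = x - fx / dfx                      # Newton predictor
        fy = f(y)
        x_new = y - fx * fy / (dfx * (fx - 2 * fy))  # Ostrowski corrector
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Illustrative test function f(x) = x^3 - 1 with the real root 1.
root_om, n_om = ostrowski(lambda x: x**3 - 1, lambda x: 3 * x**2, 2.3)
print("Ostrowski:", root_om, "iterations:", n_om)
```

The corrector reuses \(f^{\prime}(x_{n})\), so one iteration costs two function evaluations and one derivative evaluation.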

2. Construction of Iterative Methods Using Variational Technique

In this section, we develop some new sixth-order iterative methods for solving nonlinear equations. By using the variational iteration technique, we develop the main recurrence relation, from which we derive the new iterative methods by considering some special cases of the auxiliary function \(g\). These are multi-step methods consisting of predictor and corrector steps. The convergence order of our methods is higher than that of one-step methods. Now consider the nonlinear equation of the form $$f(x)=0.$$

Using the optimality criteria, we can obtain the value of \(\lambda\) from (3) as:

2.1. Case 1

Let \(g(x_n)=e^{\beta x_n}\), then \(g^{\prime}(x_n)= \beta g(x_n)\). Using these values in (12), we obtain the following algorithm.


Algorithm 2.1. For a given \(x_{0}\), compute the approximate solution \(x_{n+1}\) by the following iterative scheme: \begin{eqnarray*} y_{n} &=&x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})}-\frac{f^{2}(x_{n})f^{\prime \prime }(x_{n})}{2f^{\prime 3}(x_{n})}-\frac{f^{3}(x_{n})f^{\prime \prime \prime }(x_{n})}{6f^{\prime 4}(x_{n})},\quad n=0,1,2,..., \\ x_{n+1}&=&y_n-\frac{f(y_n)}{[f^{\prime }(y_n)+\beta f(y_n)]}. \end{eqnarray*}
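Algorithm 2.1 translates directly into a short routine. The sketch below is one possible implementation in double precision; the function name `algorithm_2_1`, the tolerance, the step-size stopping test and the choice of \(xe^{x}-1\) as a test function are assumptions made for illustration.

```python
import math


def algorithm_2_1(f, d1, d2, d3, x0, beta=1.0, tol=1e-12, max_iter=50):
    """Predictor-corrector scheme of Algorithm 2.1.

    f, d1, d2, d3 are callables for f and its first three derivatives.
    """
    x = x0
    for n in range(1, max_iter + 1):
        fx, f1, f2, f3 = f(x), d1(x), d2(x), d3(x)
        # Predictor: Newton term plus second- and third-derivative terms.
        y = x - fx / f1 - fx**2 * f2 / (2 * f1**3) - fx**3 * f3 / (6 * f1**4)
        fy = f(y)
        # Corrector: Newton-like step with the extra beta * f(y) term.
        x_new = y - fy / (d1(y) + beta * fy)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Illustrative test function f(x) = x * exp(x) - 1 with x0 = 1.
f = lambda x: x * math.exp(x) - 1
d1 = lambda x: (x + 1) * math.exp(x)
d2 = lambda x: (x + 2) * math.exp(x)
d3 = lambda x: (x + 3) * math.exp(x)

root, n = algorithm_2_1(f, d1, d2, d3, 1.0)
print("root:", root, "iterations:", n)
```

Note that every iteration needs \(f\), \(f^{\prime}\), \(f^{\prime\prime}\) and \(f^{\prime\prime\prime}\) at \(x_{n}\), plus \(f\) and \(f^{\prime}\) at \(y_{n}\).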

2.2. Case 2

Let \(g(x_n)=e^{\beta f(x_n)}\), then \(g^{\prime}(x_n)= \beta f^{\prime }(x_{n})g(x_n)\). Using these values in (12), we obtain the following algorithm.

Algorithm 2.2. For a given \(x_{0}\), compute the approximate solution \(x_{n+1}\) by the following iterative scheme: \begin{eqnarray*} y_{n} &=&x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})}-\frac{f^{2}(x_{n})f^{\prime \prime }(x_{n})}{2f^{\prime 3}(x_{n})}-\frac{f^{3}(x_{n})f^{\prime \prime \prime }(x_{n})}{6f^{\prime 4}(x_{n})},\quad n=0,1,2,..., \\ x_{n+1}&=&y_n-\frac{f(y_n)}{[f^{\prime }(y_n)+\beta f(y_n)f^{\prime }(x_n)]}. \end{eqnarray*}

2.3. Case 3

Let \(g(x_n)=e^{-\frac {\beta}{ f(x_n)}}\), then \(g^{\prime}(x_n)= \beta \frac {f^{\prime }(x_n)}{f^2(x_n)}g(x_n)\). Using these values in (12), we obtain the following algorithm.

Algorithm 2.3. For a given \(x_{0}\), compute the approximate solution \(x_{n+1}\) by the following iterative scheme: \begin{eqnarray*} y_{n} &=&x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})}-\frac{f^{2}(x_{n})f^{\prime \prime }(x_{n})}{2f^{\prime 3}(x_{n})}-\frac{f^{3}(x_{n})f^{\prime \prime \prime }(x_{n})}{6f^{\prime 4}(x_{n})},\quad n=0,1,2,..., \\ x_{n+1}&=&y_n-\frac{f^{2}(x_n)f(y_n)}{[f^{2}(x_n)f^{\prime }(y_n)+\beta f^{\prime}(x_n) f(y_n)]}. \end{eqnarray*}
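The three algorithms share the same predictor and differ only in the corrector, which depends on the choice of \(g\). A minimal sketch that makes this structure explicit is given below; the names `predictor`, `corrector` and `solve`, the early exit when \(f(x_{n})=0\), the tolerance and the test function \(x^{3}-1\) are illustrative assumptions rather than part of the original algorithms.

```python
def predictor(x, fx, f1, f2, f3):
    """Shared predictor step y_n of Algorithms 2.1, 2.2 and 2.3."""
    return x - fx / f1 - fx**2 * f2 / (2 * f1**3) - fx**3 * f3 / (6 * f1**4)


def corrector(case, y, fx, f1x, fy, f1y, beta):
    """Corrector step x_{n+1} for the three choices of g."""
    if case == 1:  # g(x) = exp(beta * x)
        return y - fy / (f1y + beta * fy)
    if case == 2:  # g(x) = exp(beta * f(x))
        return y - fy / (f1y + beta * fy * f1x)
    if case == 3:  # g(x) = exp(-beta / f(x)), in the form of Algorithm 2.3
        return y - fx**2 * fy / (fx**2 * f1y + beta * f1x * fy)
    raise ValueError("case must be 1, 2 or 3")


def solve(case, f, d1, d2, d3, x0, beta=1.0, tol=1e-12, max_iter=50):
    x = x0
    for n in range(1, max_iter + 1):
        fx = f(x)
        if fx == 0:
            # Already at a root; this also avoids a 0/0 in case 3.
            return x, n - 1
        f1x = d1(x)
        y = predictor(x, fx, f1x, d2(x), d3(x))
        x_new = corrector(case, y, fx, f1x, f(y), d1(y), beta)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


# Illustrative test function f(x) = x^3 - 1 with x0 = 2.3.
f = lambda x: x**3 - 1
d1 = lambda x: 3 * x**2
d2 = lambda x: 6 * x
d3 = lambda x: 6.0

results = {case: solve(case, f, d1, d2, d3, 2.3) for case in (1, 2, 3)}
for case, (root, n) in results.items():
    print(f"Algorithm 2.{case}: root = {root:.15f}, iterations = {n}")
```

Keeping the corrector separate makes it easy to try other auxiliary functions \(g\) without touching the predictor.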

By assuming different values of \(\beta\), we can obtain different iterative methods. To obtain the best results in all the above algorithms, choose values of \(\beta\) that make the denominator nonzero and largest in magnitude.

3. Convergence Analysis

In this section, we discuss the convergence order of the main and general iteration scheme (12).

Theorem 3.1. Suppose that \(\alpha \) is a root of the equation \(f(x)=0\). If \(f(x)\) is sufficiently smooth in the neighborhood of \(\alpha \), then the convergence order of the main and general iteration scheme, described in relation (12), is at least six.

Proof. To analyze the convergence of the main and general iteration scheme described in relation (12), suppose that \(\alpha \) is a root of the equation \(f(x)=0\) and let \(e_n\) be the error at the \(n\)th iteration. Then \(e_n=x_n-\alpha\) and, by using the Taylor series expansion about \(\alpha\), we have \begin{eqnarray*} f(x_n)&=&{f^{\prime }(\alpha)e_n}+\frac{1}{2!}{f^{\prime \prime }(\alpha)e_n^2}+\frac{1}{3!}{f^{\prime \prime \prime }(\alpha)e_n^3}+\frac{1}{4!}{f^{(iv) }(\alpha)e_n^4}+\frac{1}{5!}{f^{(v) }(\alpha)e_n^5}\\ &+&\frac{1}{6!}{f^{(vi) }(\alpha)e_n^6}+O(e_n^{7}) \end{eqnarray*}
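A convergence order can also be checked numerically. The sketch below estimates the computational order of convergence \(\rho \approx \ln(e_{3}/e_{2})/\ln(e_{2}/e_{1})\) for Algorithm 2.1 with \(\beta=1\) on \(f(x)=x^{3}-1\), whose root \(\alpha=1\) is known exactly. The test function, the starting point \(1.3\), the working precision of 300 digits and the use of Python's `decimal` module are assumptions chosen for illustration; this is a numerical sanity check on one example, not a substitute for the proof.

```python
from decimal import Decimal, getcontext

# High working precision, because the errors shrink far below double precision.
getcontext().prec = 300


def f(x):
    return x**3 - 1


def d1(x):
    return 3 * x**2


def d2(x):
    return 6 * x


def d3(x):
    return Decimal(6)


def step(x, beta=Decimal(1)):
    """One iteration of Algorithm 2.1 in Decimal arithmetic."""
    fx, f1, f2, f3 = f(x), d1(x), d2(x), d3(x)
    y = x - fx / f1 - fx**2 * f2 / (2 * f1**3) - fx**3 * f3 / (6 * f1**4)
    fy = f(y)
    return y - fy / (d1(y) + beta * fy)


alpha = Decimal(1)          # exact root of x^3 - 1
x = Decimal("1.3")          # illustrative starting point
errors = []
for _ in range(3):
    x = step(x)
    errors.append(abs(x - alpha))

e1, e2, e3 = errors
# Computational order of convergence from three successive errors.
order = (e3 / e2).ln() / (e2 / e1).ln()
print("errors:", [f"{e:.3E}" for e in errors])
print("estimated order:", float(order))
```

For this example the estimate should come out close to six; repeating the experiment with other test functions and starting points gives a broader picture.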

4. Applications

In this section we include some nonlinear functions to illustrate the efficiency of our developed algorithms for \(\beta = 1\). We compare our developed algorithms with Newton's method (NM) [12], Ostrowski's method (OM) [7], Traub's method (TM) [12], and the modified Halley's method (MHM) [28]. We used \(\varepsilon =10^{-15}\). The following stopping criteria are used for computer programs:

- \(|x_{n+1}-x_{n}|< \varepsilon .\)

- \(|f(x_{n+1})|< \varepsilon .\)
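The two stopping criteria can be wrapped in a small helper. The sketch below assumes that both tests must hold at the same time, which the text does not state explicitly, and uses \(\varepsilon=10^{-12}\) because it runs in ordinary double precision, where a residual below \(10^{-15}\) may not be reachable. The names `should_stop` and `newton`, and the use of Newton's method on \(\ln(x)+x\) with \(x_{0}=2.6\), are illustrative assumptions.

```python
import math


def should_stop(x_new, x_old, f, eps):
    """True when both the step size and the residual are below eps."""
    return abs(x_new - x_old) < eps and abs(f(x_new)) < eps


def newton(f, df, x0, eps=1e-12, max_iter=100):
    """Newton's method driven by the two stopping criteria above."""
    x = x0
    for n in range(1, max_iter + 1):
        x_new = x - f(x) / df(x)
        if should_stop(x_new, x, f, eps):
            return x_new, n
        x = x_new
    raise RuntimeError("stopping criteria not met within max_iter iterations")


# Illustrative test function f(x) = ln(x) + x with x0 = 2.6.
f2 = lambda x: math.log(x) + x
df2 = lambda x: 1 / x + 1

root, n = newton(f2, df2, 2.6)
print("root:", root, "iterations:", n, "residual:", abs(f2(root)))
```

Using both tests guards against stopping on a small step while the residual is still large, and vice versa.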

Table 1. Comparison of various iterative methods for \(f_{1}=x^{3}+4x^{2}-10\), \(x_{0}=-0.7\).

| Methods | N | \(N_{f}\) | \(\lvert f(x_{n+1})\rvert\) | \(x_{n+1}\) |
|---|---|---|---|---|
| NM | \(20\) | \(40\) | \(1.056394e-24\) | |
| OM | \(51\) | \(153\) | \(9.750058e-26\) | |
| TM | \(10\) | \(30\) | \(1.056394e-24\) | |
| MHM | \(30\) | \(90\) | \(2.819181e-35\) | \(1.365230013414096845760806828980\) |
| Algorithm 2.1 | \(3\) | \(9\) | \(1.037275e-74\) | |
| Algorithm 2.2 | \(3\) | \(9\) | \(7.962504e-39\) | |
| Algorithm 2.3 | \(3\) | \(9\) | \(2.748246e-39\) | |

Table 2. Comparison of various iterative methods for \(f_{2}=\ln(x)+x\), \(x_{0}=2.6\).

| Methods | N | \(N_{f}\) | \(\lvert f(x_{n+1})\rvert\) | \(x_{n+1}\) |
|---|---|---|---|---|
| NM | \(8\) | \(16\) | \(6.089805e-28\) | |
| OM | \(4\) | \(12\) | \(3.421972e-53\) | |
| TM | \(4\) | \(12\) | \(6.089805e-28\) | |
| MHM | \(4\) | \(21\) | \(4.247135e-28\) | \(0.567143290409783872999968662210\) |
| Algorithm 2.1 | \(3\) | \(9\) | \(1.034564e-21\) | |
| Algorithm 2.2 | \(3\) | \(9\) | \(1.994520e-38\) | |
| Algorithm 2.3 | \(3\) | \(9\) | \(7.258268e-15\) | |

Table 3. Comparison of various iterative methods for \(f_{3}=\ln{x}+\cos(x)\), \(x_{0}=0.1\).

| Methods | N | \(N_{f}\) | \(\lvert f(x_{n+1})\rvert\) | \(x_{n+1}\) |
|---|---|---|---|---|
| NM | \(6\) | \(12\) | \(2.313773e-18\) | |
| OM | \(3\) | \(9\) | \(5.848674e-26\) | |
| TM | \(3\) | \(9\) | \(2.313773e-18\) | |
| MHM | \(4\) | \(12\) | \(1.281868e-60\) | \(0.397748475958746982312388340926\) |
| Algorithm 2.1 | \(2\) | \(6\) | \(2.915840e-34\) | |
| Algorithm 2.2 | \(2\) | \(6\) | \(3.380437e-32\) | |
| Algorithm 2.3 | \(2\) | \(6\) | \(5.839120e-23\) | |

Table 4. Comparison of various iterative methods for \(f_{4}=xe^{x}-1\), \(x_{0}=1\).

| Methods | N | \(N_{f}\) | \(\lvert f(x_{n+1})\rvert\) | \(x_{n+1}\) |
|---|---|---|---|---|
| NM | \(5\) | \(10\) | \(8.478184e-17\) | |
| OM | \(3\) | \(9\) | \(8.984315e-40\) | |
| TM | \(3\) | \(9\) | \(2.130596e-33\) | |
| MHM | \(3\) | \(9\) | \(1.116440e-68\) | \(0.567143290409783872999968662210\) |
| Algorithm 2.1 | \(2\) | \(6\) | \(5.078168e-24\) | |
| Algorithm 2.2 | \(3\) | \(9\) | \(4.315182e-82\) | |
| Algorithm 2.3 | \(3\) | \(9\) | \(1.027667e-46\) | |

Table 5. Comparison of various iterative methods for \(f_{5}=x^{3}-1\), \(x_{0}=2.3\).

| Methods | N | \(N_{f}\) | \(\lvert f(x_{n+1})\rvert\) | \(x_{n+1}\) |
|---|---|---|---|---|
| NM | \(7\) | \(14\) | \(9.883568e-25\) | |
| OM | \(4\) | \(12\) | \(2.619999e-56\) | |
| TM | \(4\) | \(12\) | \(3.256164e-49\) | |
| MHM | \(3\) | \(9\) | \(3.233029e-46\) | \(1.000000000000000000000000000000\) |
| Algorithm 2.1 | \(2\) | \(6\) | \(1.472038e-16\) | |
| Algorithm 2.2 | \(3\) | \(9\) | \(1.847778e-15\) | |
| Algorithm 2.3 | \(3\) | \(9\) | \(1.620804e-18\) | |

5. Polynomiography

Polynomials are one of the most significant objects in many fields of mathematics. Polynomial root-finding has played a key role in the history of mathematics. It is one of the oldest and most deeply studied mathematical problems. The latest interesting contribution to the history of polynomial root-finding was made by Kalantari [29], who introduced polynomiography. As a method which generates nice looking graphics, it was patented by Kalantari in the USA in 2005 [30, 31]. Polynomiography is defined to be "the art and science of visualization in approximation of the zeros of complex polynomials, via fractal and non fractal images created using the mathematical convergence properties of iteration functions" [29]. An individual image is called a "polynomiograph". Polynomiography combines both art and science aspects.

Polynomiography gives a new way to solve the ancient problem by using new algorithms and computer technology. Polynomiography is based on the use of one or an infinite number of iteration methods formulated for the purpose of approximating the roots of polynomials, e.g. Newton's method, Halley's method, etc. The word "fractal", which appears in the definition of polynomiography, was coined by the famous mathematician Benoit Mandelbrot [32]. Both fractal images and polynomiographs can be obtained via different iterative schemes. Fractals are self-similar, have a typical structure and are independent of scale. Polynomiographs, on the other hand, are quite different: the "polynomiographer" can control their shape and design them in a more predictable way by applying different iteration methods to the infinite variety of complex polynomials. Generally, fractals and polynomiographs belong to different classes of graphical objects. Polynomiography has diverse applications in math, science, education, art and design. According to the Fundamental Theorem of Algebra, any complex polynomial with complex coefficients \(\left\{ a_{n},a_{n-1},...,a_{1},a_{0}\right\} \): $$p(z)=a_{n}z^{n}+a_{n-1}z^{n-1}+...+a_{1}z+a_{0}$$ of degree \(n\) has \(n\) roots (zeros), counted with multiplicity.

Usually, polynomiographs are colored based on the number of iterations needed to obtain the approximation of some polynomial root with a given accuracy and a chosen iteration method. Polynomiography, its theoretical background and its artistic applications are described in [29, 30, 31].

5.1. Iteration

During the last century, different numerical techniques for solving the nonlinear equation \(f(x)=0\) have been successfully applied. Now we define our developed algorithms as: \begin{eqnarray*} y_{n} &=&x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})}-\frac{f^{2}(x_{n})f^{\prime \prime }(x_{n})}{2f^{\prime 3}(x_{n})}-\frac{f^{3}(x_{n})f^{\prime \prime \prime }(x_{n})}{6f^{\prime 4}(x_{n})},\quad n=0,1,2,..., \\ x_{n+1}&=&y_n-\frac{f(y_n)}{[f^{\prime }(y_n)+\beta f(y_n)]}, \end{eqnarray*} which we call Algorithm 2.1 for solving nonlinear equations, \begin{eqnarray*} y_{n} &=&x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})}-\frac{f^{2}(x_{n})f^{\prime \prime }(x_{n})}{2f^{\prime 3}(x_{n})}-\frac{f^{3}(x_{n})f^{\prime \prime \prime }(x_{n})}{6f^{\prime 4}(x_{n})},\quad n=0,1,2,..., \\ x_{n+1}&=&y_n-\frac{f(y_n)}{[f^{\prime }(y_n)+\beta f(y_n)f^{\prime }(x_n)]}, \end{eqnarray*} which we call Algorithm 2.2 for solving nonlinear equations, and \begin{eqnarray*} y_{n} &=&x_{n}-\frac{f(x_{n})}{f^{\prime }(x_{n})}-\frac{f^{2}(x_{n})f^{\prime \prime }(x_{n})}{2f^{\prime 3}(x_{n})}-\frac{f^{3}(x_{n})f^{\prime \prime \prime }(x_{n})}{6f^{\prime 4}(x_{n})},\quad n=0,1,2,..., \\ x_{n+1}&=&y_n-\frac{f^{2}(x_n)f(y_n)}{[f^{2}(x_n)f^{\prime }(y_n)+\beta f^{\prime}(x_n) f(y_n)]}, \end{eqnarray*} which we call Algorithm 2.3 for solving nonlinear equations.

Let \(p(z)\) be a complex polynomial. Then \begin{eqnarray*} y_{n} &=&z_{n}-\frac{p(z_{n})}{p^{\prime }(z_{n})}-\frac{p^{2}(z_{n})p^{\prime \prime }(z_{n})}{2p^{\prime 3}(z_{n})}-\frac{p^{3}(z_{n})p^{\prime \prime \prime }(z_{n})}{6p^{\prime 4}(z_{n})},\quad n=0,1,2,..., \\ z_{n+1}&=&y_n-\frac{p(y_n)}{[p^{\prime }(y_n)+\beta p(y_n)]} \end{eqnarray*} is Algorithm 2.1 for solving nonlinear complex equations, \begin{eqnarray*} y_{n} &=&z_{n}-\frac{p(z_{n})}{p^{\prime }(z_{n})}-\frac{p^{2}(z_{n})p^{\prime \prime }(z_{n})}{2p^{\prime 3}(z_{n})}-\frac{p^{3}(z_{n})p^{\prime \prime \prime }(z_{n})}{6p^{\prime 4}(z_{n})},\quad n=0,1,2,..., \\ z_{n+1}&=&y_n-\frac{p(y_n)}{[p^{\prime }(y_n)+\beta p(y_n)p^{\prime }(z_n)]} \end{eqnarray*} is Algorithm 2.2 for solving nonlinear complex equations, and \begin{eqnarray*} y_{n} &=&z_{n}-\frac{p(z_{n})}{p^{\prime }(z_{n})}-\frac{p^{2}(z_{n})p^{\prime \prime }(z_{n})}{2p^{\prime 3}(z_{n})}-\frac{p^{3}(z_{n})p^{\prime \prime \prime }(z_{n})}{6p^{\prime 4}(z_{n})},\quad n=0,1,2,..., \\ z_{n+1}&=&y_n-\frac{p^{2}(z_n)p(y_n)}{[p^{2}(z_n)p^{\prime }(y_n)+\beta p^{\prime}(z_n) p(y_n)]} \end{eqnarray*} is Algorithm 2.3 for solving nonlinear complex equations, where \(z_{0}\in\mathbb{C}\) is a starting point.

The sequence \( \{z_{n}\}_{n=0}^{\infty }\) is called the orbit of the point \(z_{0}\). If the orbit converges to a root \(z^{\ast }\) of \(p\), then we say that \(z_{0}\) is attracted to \(z^{\ast }\). The set of all starting points for which \( \{z_{n}\}_{n=0}^{\infty }\) converges to the root \(z^{\ast }\) is called the basin of attraction of \(z^{\ast }.\)

6. Convergence test

In numerical algorithms that are based on iterative processes we need a stop criterion for the process, that is, a test that tells us that the process has converged or is very near to the solution. This type of test is called a convergence test. Usually, in iterative processes that use feedback, like root-finding methods, the standard convergence test has the following form:

7. Applications

In this section we present some examples of polynomiographs for different complex polynomial equations \(p(z)=0\) and some special polynomials using our developed algorithms. The different colors of an image depend upon the number of iterations needed to reach a root with the given accuracy \(\varepsilon =0.001\). One can obtain infinitely many nice looking polynomiographs by changing the parameter \(k,\) where \(k\) is the upper bound of the number of iterations.

7.0.1. Polynomiographs of Different Complex Polynomials

In this section, we present polynomiographs of the following complex polynomials, using our developed methods for \(\beta=1\).
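To make the construction concrete, the sketch below computes the iteration counts behind a polynomiograph of \(z^{3}-1\) using Algorithm 2.1 with \(\beta=1\), \(\varepsilon=0.001\) and an upper bound \(k\) on the number of iterations. Several details are assumptions, not taken from the original: the convergence test is taken to be \(|z_{n+1}-z_{n}|<\varepsilon\), the region is the square \([-2,2]^{2}\) sampled on a coarse \(41\times 41\) grid, points where a division by zero occurs are assigned the count \(k\), and the counts are rendered as text characters instead of colors.

```python
def p(z):
    return z**3 - 1


def dp(z):
    return 3 * z**2


def d2p(z):
    return 6 * z


def d3p(z):
    return 6


def step(z, beta=1.0):
    """One iteration of Algorithm 2.1 for the complex polynomial p."""
    pz, p1, p2, p3 = p(z), dp(z), d2p(z), d3p(z)
    y = z - pz / p1 - pz**2 * p2 / (2 * p1**3) - pz**3 * p3 / (6 * p1**4)
    py = p(y)
    return y - py / (dp(y) + beta * py)


def iterations_to_converge(z0, eps=1e-3, k=15):
    """Return (iteration count, last iterate) for the orbit of z0."""
    z = z0
    for n in range(1, k + 1):
        try:
            z_new = step(z)
        except (ZeroDivisionError, OverflowError):
            return k, z            # treated as "did not converge"
        if abs(z_new - z) < eps:   # assumed convergence test
            return n, z_new
        z = z_new
    return k, z


def polynomiograph(size=41, half_width=2.0, eps=1e-3, k=15):
    """Grid of iteration counts over the square [-half_width, half_width]^2."""
    rows = []
    for i in range(size):
        im = half_width - 2 * half_width * i / (size - 1)
        row = []
        for j in range(size):
            re = -half_width + 2 * half_width * j / (size - 1)
            n, _ = iterations_to_converge(complex(re, im), eps, k)
            row.append(n)
        rows.append(row)
    return rows


grid = polynomiograph()
palette = " .:-=+*#%@"  # more iterations -> denser character
for row in grid:
    print("".join(palette[min(n, len(palette)) - 1] for n in row))
```

Mapping each count to a color instead of a character, and recording which root the orbit approaches, would give the usual colored picture of the basins of attraction.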

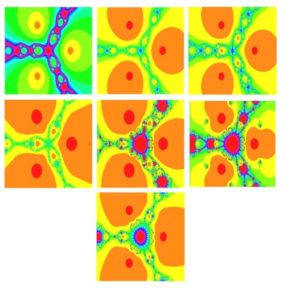

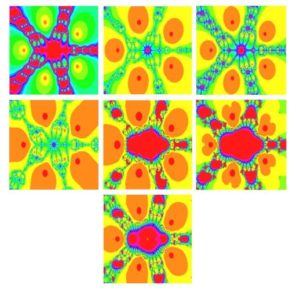

Example 7.1. Polynomiographs for \(z^{2}-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 1. Polynomiographs of \(z^{2}-1=0.\)

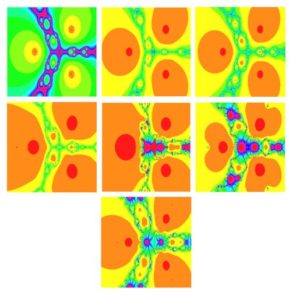

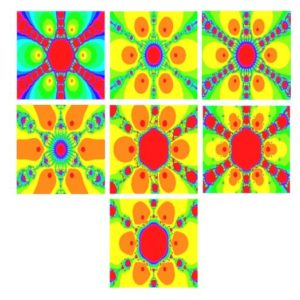

Example 7.2. Polynomiographs for \(z^{3}-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 2. Polynomiographs of \(z^{3}-1=0.\)

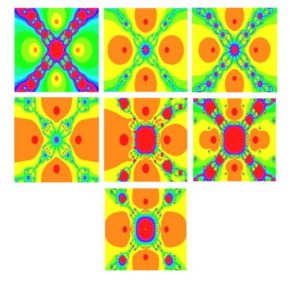

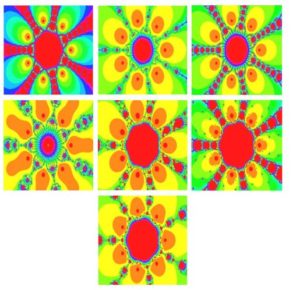

Example 7.3. Polynomiographs for \(z^{3}-z^2+1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 3. Polynomiographs of \(z^{3}-z^2+1=0.\)

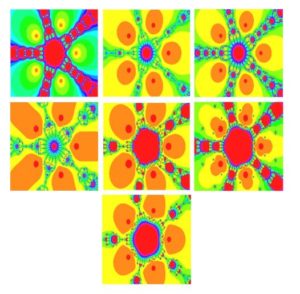

Example 7.4. Polynomiographs for \(z^{4}-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 4. Polynomiographs of \(z^{4}-1=0.\)

Example 7.5. Polynomiographs for \(z^{4}-z^2-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 5. Polynomiographs of \(z^{4}-z^2-1=0.\)

Example 7.6. Polynomiographs for \(z^{5}-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 6. Polynomiographs of \(z^{5}-1=0.\)

Example 7.7. Polynomiographs for \(z^{5}-z^3+2=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 7. Polynomiographs of \(z^{5}-z^3+2=0.\)

Example 7.8. Polynomiographs for \(z^{6}-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 8. Polynomiographs of \(z^{6}-1=0.\)

Example 7.9. Polynomiographs for \(z^{6}-z^4+4=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 9. Polynomiographs of \(z^{6}-z^4+4=0.\)

Example 7.10. Polynomiographs for \(z^{7}-1=0\) via Newton's method (row one, left figure), Ostrowski's method (row one, middle figure), Traub's method (row one, right figure), the modified Halley's method (row two, left figure), Algorithm 2.1 (row two, middle figure), Algorithm 2.2 (row two, right figure) and Algorithm 2.3 (row three) are given below.

Figure 10. Polynomiographs of \(z^{7}-1=0.\)

Conclusions

We have established three new sixth-order iterative methods for solving nonlinear equations. We solved some test examples to check the efficiency of our developed methods. Tables 1-5 show that, in terms of the number of iterations, our methods perform as well as or better than Newton's method, Ostrowski's method, Traub's method and the modified Halley's method. We also compared our methods with Newton's method, Ostrowski's method, Traub's method and the modified Halley's method by presenting polynomiographs of different complex polynomials.

Competing Interests

The authors declare that they have no competing interests.

References

- Nazeer, W., Naseem, A., Kang, S. M., & Kwun, Y. C. (2016). Generalized Newton Raphson's method free from second derivative. J. Nonlinear Sci. Appl., 9, 2823-2831.

- Nazeer, W., Tanveer, M., Kang, S. M., & Naseem, A. (2016). A new Householder's method free from second derivatives for solving nonlinear equations and polynomiography. J. Nonlinear Sci. Appl., 9, 998-1007.

- Chun, C. (2006). Construction of Newton-like iteration methods for solving nonlinear equations. Numerische Mathematik, 104(3), 297-315.

- Burden, R. L., Faires, J. D., & Reynolds, A. C. (2001). Numerical analysis.

- Stoer, J., & Bulirsch, R. (2013). Introduction to numerical analysis (Vol. 12). Springer Science & Business Media.

- Quarteroni, A., Sacco, R., & Saleri, F. (2010). Numerical mathematics (Vol. 37). Springer Science & Business Media.

- Chen, D., Argyros, I. K., & Qian, Q. S. (1993). A note on the Halley method in Banach spaces. Applied Mathematics and Computation, 58(2-3), 215-224.

- Gutiérrez, J. M., & Hernández, M. A. (2001). An acceleration of Newton's method: Super-Halley method. Applied Mathematics and Computation, 117(2-3), 223-239.

- Gutiérrez, J. M., & Hernández, M. A. (1997). A family of Chebyshev-Halley type methods in Banach spaces. Bulletin of the Australian Mathematical Society, 55(1), 113-130.

- Householder, A. S. (1970). The numerical treatment of a single nonlinear equation. McGraw-Hill, New York.

- Sebah, P., & Gourdon, X. (2001). Newton’s method and high order iterations. Numbers Comput., 1-10.

- Traub, J. F. (1982). Iterative methods for the solution of equations (Vol. 312). American Mathematical Soc.

- Inokuti, M., Sekine, H., & Mura, T. (1978). General use of the Lagrange multiplier in nonlinear mathematical physics. Variational method in the mechanics of solids, 33(5), 156-162.

- He, J. H. (2007). Variational iteration method - some recent results and new interpretations. Journal of Computational and Applied Mathematics, 207(1), 3-17.

- He, J. H. (1999). Variational iteration method - a kind of non-linear analytical technique: some examples. International Journal of Non-Linear Mechanics, 34(4), 699-708.

- Noor, M. A. (2007). New classes of iterative methods for nonlinear equations. Applied Mathematics and Computation, 191(1), 128-131.

- Noor, M. A., & Shah, F. A. (2009). Variational iteration technique for solving nonlinear equations. Journal of Applied Mathematics and Computing, 31(1-2), 247-254.

- Kou, J. (2007). The improvements of modified Newton’s method. Applied Mathematics and Computation, 189(1), 602-609.

- Abbasbandy, S. (2003). Improving Newton-Raphson method for nonlinear equations by modified Adomian decomposition method. Applied Mathematics and Computation, 145(2-3), 887-893.

- Naseem, A., Awan, M. W., & Nazeer, W. (2016). Dynamics of an iterative method for nonlinear equations. Sci. Int. (Lahore), 28(2), 819-823.

- Naseem, A., Nazeer, W., & Awan, M. W. (2016). Polynomiography via modified Abbasbanday’s method. Sci. Int. (Lahore), 28(2), 761-766.

- Naseem, A., Attari, M. Y., & Awan, M. W. (2016). Polynomiography via modified Golbabi and Javidi's method. Sci. Int. (Lahore), 28(2), 867-871.

- Traub, J. F. (1982). Iterative methods for the solution of equations (Vol. 312). American Mathematical Soc.

- Nawaz, M., Naseem, A., & Nazeer, W. (2018). New iterative methods using variational iteration technique and their dynamical behavior. Open. Math. Anal., 2(2), 01-09.

- Noor, M. A., & Shah, F. A. (2009). Variational iteration technique for solving nonlinear equations. Journal of Applied Mathematics and Computing, 31(1-2), 247-254.

- Noor, M. A., Shah, F. A., Noor, K. I., & Al-Said, E. (2011). Variational iteration technique for finding multiple roots of nonlinear equations. Scientific Research and Essays, 6(6), 1344-1350.

- Noor, M. A., Khan, W. A., & Hussain, A. (2007). A new modified Halley method without second derivatives for nonlinear equation. Applied Mathematics and Computation, 189(2), 1268-1273.

- Noor, K. I., & Noor, M. A. (2007). Predictor-corrector Halley method for nonlinear equations. Applied Mathematics and Computation, 188(2), 1587-1591.

- Kalantari, B. (2005). U.S. Patent No. 6,894,705. Washington, DC: U.S. Patent and Trademark Office.

- Kalantari, B. (2005). Polynomiography: from the fundamental theorem of Algebra to art. Leonardo, 38(3), 233-238.

- Kotarski, W., Gdawiec, K., & Lisowska, A. (2012, July). Polynomiography via Ishikawa and Mann iterations. In International Symposium on Visual Computing (pp. 305-313). Springer, Berlin, Heidelberg.

- Kalantari, B. (2009). Polynomial root-finding and polynomiography. World Scientific, Singapore.